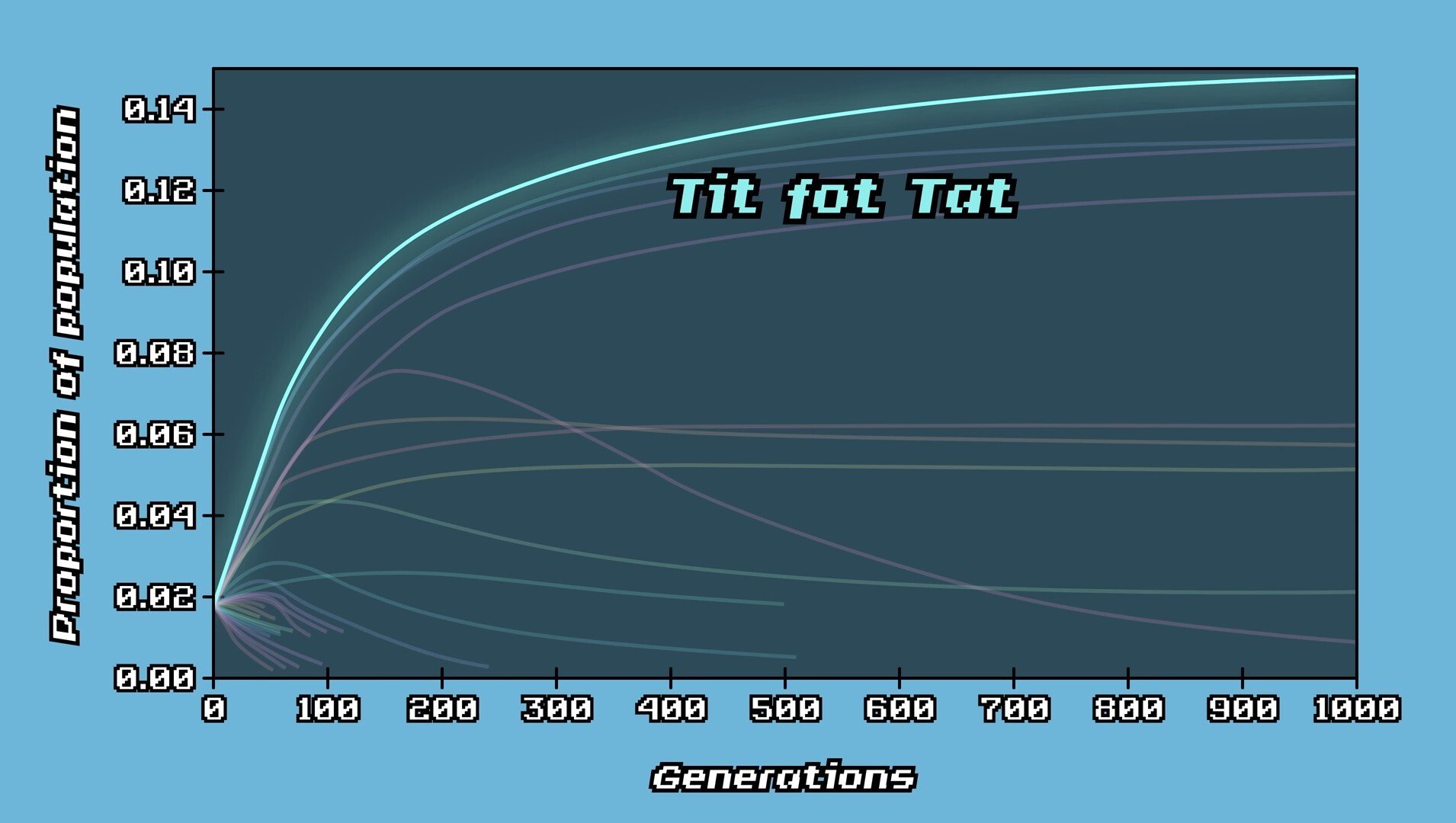

They all fail at game theory. When being negative, everyone loses. Tit for Tat + 10% forgiveness is the most successful and highest growth potential. T4T means you are always nice, always positive, and when someone is negative, you respond in kind but randomly forgive 10% of the time to exit the stupidity spiral. Most world leaders know and operate under T4T now that it was established as the only path to maximal growth for everyone. Failing to apply this when everyone else is applying it will ALWAYS result in bringing everyone down but the most damage will ALWAYS occur to the perpetrating entity when all others are playing T4Tpt.

Need max AVX instructions. Anything with P/E cores is junk. Only enterprise P cores have the max AVX instructions. When P/E are mixed the advanced AVX is disabled in microcode because the CPU scheduler is unable to determine if a process thread contains an AVX instruction and there is no asymmetrical scheduler that handles this. Prior to early 12k series Intel, the microcode for P enterprise could allegedly run if swapped manually. This was “fused off” to prevent it, probably because Linux could easily be adapted to asymmetrical scheduling but Windows would probably not. The whole reason W11 had to be made was because of the E-cores and the way the scheduler and spin up of idol cores works, at least according to someone on Linux Plumbers for the CPU scheduler ~2020. There are already asymmetric schedulers in Android ARM.

Anyways I think it was on Gamer’s Nexus in the last week or two that Intel was doing some all P core consumer stuff. I’d look at that. According to chips and cheese, the primary CPU bottleneck for tensors is the bus width and clock management of the L2 to L1 cache.

I do alright with my laptop, but haven’t tried R1 stuff yet. The 70B llama2 stuff that I ran was untenable for CPU only with a 12700 with just CPU. It is a little slower than my reading pace when split with a 16 GB GPU, and that was running a 4 bit quantization version.